Falling Down Bad

How averages can be deceptively attractive, and what we can do to stop falling down bad for them

Bill Gates walks into a bar, and everybody in the bar — on average — becomes a millionaire.

Then everybody in that bar is a millionaire, right?

Right?

Good theories explain why things happen; they provide insight through general principles independent of the thing being explained.

Mathematics has a lot of good theories.

Statistics is one of them, and it’s quite meditative.

Statistics provides a bag of tools to approach different problems from multiple angles. It’s a mathematical approach to describing things, predicting events, and analyzing relationships between things. It helps us understand cause and effect and project the future.

Imagine a world where the only way to predict the outcome of a decision is to make the decision and find out.

Getting cause and effect right allows humans to cheat evolution; by successfully predicting outcomes, we can see parallel worlds and choose the ones where we survive.

Obviously, most decisions are not a matter of life or death.

But most poor decisions are made by neglecting the limits of statistics.

The most important decisions we make are often made in the face of uncertainty.

We can never see the full picture of our real-world problems. But that doesn’t stop us from painting the fuller picture; by gaining different perspectives, we clear unknowns, cover up our blind spots, and spot biases and errors in our decisions before we make them.

Despite the predictive power of a good theory, our decision-making will always be imperfect because we make decisions based on imperfect knowledge.

How we see risk shapes our decision-making.

Let’s explore this through a thought experiment.

path to freedom

Imagine freedom — whatever that means to you — is obtained by crossing a river.

The river is four feet deep on average.

The successful few who made it across boast that it was simply a matter of walking the width of the river: an easy path to freedom.

Would you risk crossing this river to be free?

For the sake of this experiment, let’s say you do.

The average depth of this river is four feet. That sounds walkable, assuming the depth is consistently four feet. On top of that, those who successfully made it across agree.

Everything you know so far suggests you cross.

So you roll up your sleeves and begin your path to freedom.

The current is calm, and your footing is steady. The water rises from your knees to your hips, then to your chest by your 100th step. The depth has increased steadily over your first 100 steps, so the next step should be safe.

It was not.

The 101st step was much steeper than expected. You lose your footing, sink, and join your fellow monkeys at the bottom.

seriously?

If you were so confident in your plan, how did you lose?

You probably spotted the fatal flaw in the plan and would not have crossed blindly, but thank you for playing out the thought experiment. ( 👍🏻ᐛ )👍🏻

After consulting with the other monkeys at the bottom, we uncover the underlying bias: we focus too much on what we know and lose to what we don’t know.

“Don’t cross a river if it is four feet deep on average”

attractive averages

Theories are abstractions that separate the signal from the noise.

Theories make sense of things. The danger lies in oversimplification — abstracting away the essence of a problem.

By inferring that the river was consistently four feet deep based on its average depth, we make a costly oversimplification, as demonstrated by the monkeys at the bottom. They used the average as a map for the territory.

The danger arises when we confuse the map with the territory.

The monkey mind represents System 1 thinking: our tendency to save time and energy by using heuristics, rule-of-thumb shortcuts for decision-making.

Heuristics trade accuracy for speed when making decisions. They’re used in fight-or-flight responses and are convenient until they’re not.

Heuristics are prediction tools fine-tuned by evolution.

They allow us to map theory to practice without the cost of thinking.

For the monkey mind, they’re not a bug, they’re a feature.

Sometimes, these prediction tools are too fine-tuned and oversimplify cause and effect.

The world is full of edge cases — the extreme, the unknown, and the very improbable events that tend to dominate outcomes in our world.

And attractive averages discount edge cases.

Why was this average so attractive in the first place?

How do we focus too much on what we know and lose to what we don’t know?

The average, in this case, was deceptively attractive because of the following:

narrative fallacy

survivorship bias

neglect of variability

narrative fallacy

It’s impossible for the monkey mind to understand all the features of the river in depth, so it compresses the unknown, varying depths into the story of the average.

The average was the map of the territory.

It’s easier to remember a story that makes sense of things. But stories can make deceptive sense. This average was a very attractive story to the monkey because it made crossing the river easy. By misreading the average as the river’s constant depth, the monkey could believe the river was safe to cross and already taste freedom.

The narrative fallacy materializes when an inaccurate connection is forced between two points in a story to make it easier to follow.

Solution: Give more weight to data than to stories. By offsetting your bias towards stories with a more data-driven approach, you rebalance your decision-making equations.

survivorship bias

Another self-serving bias at play is the monkey mind’s tendency to cherry-pick information to push for this attractive narrative of the average.

Incomplete knowledge shapes how we make decisions.

In this case, we only heard from those who succeeded and never from those who didn’t make it; since they were at the bottom, their voices never reached our ears.

Survivorship bias materializes when we mistake absence of evidence for evidence of absence.
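To make this concrete, here is a small Python simulation. It assumes a hypothetical river where each crossing attempt succeeds with a made-up probability of 30%. Only the survivors are left to report how it went, so a poll of the storytellers always shows a perfect success rate, no matter how deadly the river really is.

```python
import random


def simulate_crossings(n_attempts: int, p_survive: float, seed: int = 0) -> list[bool]:
    """Simulate hypothetical river crossings; True means the crosser survived."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    return [rng.random() < p_survive for _ in range(n_attempts)]


# Assumed numbers for illustration only: 1,000 attempts, 30% chance of surviving each.
outcomes = simulate_crossings(n_attempts=1000, p_survive=0.3)

# Everyone who attempted the crossing (the full picture).
true_rate = sum(outcomes) / len(outcomes)

# Only survivors are around to tell their story (what we actually hear).
reports = [survived for survived in outcomes if survived]
reported_rate = sum(reports) / len(reports)

print(f"true survival rate:       {true_rate:.2f}")
print(f"rate among storytellers:  {reported_rate:.2f}")  # 1.00 whenever anyone survived
```

The second number is 1.00 by construction: filtering the sample down to survivors removes every failure before we ever look at it.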

When all we hear are success stories, we set ourselves up for failure.

Success stories make us feel good, but do they make us do good?

History is usually written by winners and rarely by losers.

Solution: What we don’t know is far more relevant than what we know.

It’s more realistic to avoid errors than to achieve success.

Test your assumptions against reality. Play devil’s advocate and actively try to falsify your assumptions: “How will this plan fail? What evidence disproves this?” If you can’t find any, keep looking; absence of evidence is not evidence of absence.

neglect of variability

Statistics is storytelling with data, and averages are the most common story told.

The average is the location of “home” for data points.

It’s where data “points” to when heading home after a long day of work plotting. The location of “home” for data is called the central tendency, and this location can be very attractive.

Bill Gates walks into a bar, and everybody in the bar — on average — becomes a millionaire. Then everybody in that bar is a millionaire, right?

If I were in that bar, I’d want to believe this is true.

But averages are predictions, after all. And what makes a prediction reliable is how it’s abstracted. For example, if I were to predict where I’d be in May, I’m confident predicting I’ll be in Africa to climb Kilimanjaro. I would not be confident predicting the exact latitudinal or longitudinal coordinates or weather conditions.

So the level of abstraction of the prediction matters, and the quality and quantity of the sample data determine it. For example, a prediction based on a sample size of 1 is no longer a story but a single fact restated. A prediction based on a biased data set will be a one-sided story. These stories are not useful because they don’t tell us anything new. They don’t provide any angles to paint a fuller picture.

Data matters. When outliers dominate, the story of the average cannot be trusted.
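The Bill Gates joke can be checked with a few lines of Python using the standard-library `statistics` module. The patrons’ net worths below are invented for illustration, and the $100 billion figure for Gates is an assumed round number rather than a sourced one. The point is only how the mean and the median react to one extreme value.

```python
from statistics import mean, median

# Hypothetical net worths (USD) of ten bar patrons before Bill Gates walks in.
patrons = [40_000, 55_000, 60_000, 72_000, 80_000, 95_000, 110_000, 120_000, 150_000, 200_000]

# Assumed order-of-magnitude stand-in for Gates's net worth, not a sourced figure.
gates = 100_000_000_000

before = patrons
after = patrons + [gates]

# The mean is pulled toward the outlier; the median only shifts to the next middle value.
print(f"mean before:   {mean(before):>18,.0f}")
print(f"median before: {median(before):>18,.0f}")
print(f"mean after:    {mean(after):>18,.0f}")
print(f"median after:  {median(after):>18,.0f}")
```

A single patron drags the mean into the billions, while the median barely moves and stays well under a million. Neither number is the whole truth; they answer different questions, which is why leaning on either one alone is risky.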

Solution: Paint a fuller picture by considering alternative perspectives from other statistical tools: median, mode, standard deviation, the list goes on… These are just different angles for spotlighting potential errors, biases, and blind spots in your sample data.
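Here is a minimal sketch of that idea in Python, again using the standard-library `statistics` module. The two rivers are hypothetical: each list holds made-up depth readings in feet, and both average exactly four feet. Only the other statistics reveal that one of them hides a deep channel.

```python
from statistics import mean, median, mode, stdev

# Hypothetical depth readings (feet) at evenly spaced points across two rivers.
steady_river = [4, 4, 4, 4, 4, 4, 4, 4, 4, 4]
channel_river = [1, 1, 2, 2, 2, 2, 3, 3, 4, 20]  # mostly shallow, one deep channel


def summarize(depths: list[int]) -> dict[str, float]:
    """Return several summary statistics for a list of depth readings."""
    return {
        "mean": mean(depths),
        "median": median(depths),
        "mode": mode(depths),
        "stdev": stdev(depths),  # sample standard deviation
        "max": max(depths),
    }


for name, depths in [("steady", steady_river), ("channel", channel_river)]:
    stats = summarize(depths)
    print(name, {key: round(value, 2) for key, value in stats.items()})
```

The mean alone cannot tell these rivers apart. The median, mode, standard deviation, and maximum each expose a different part of what the average hides, and the maximum is the number that matters most to anyone wading across.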

“You can fool all the people some of the time and some of the people all the time, but you cannot fool all the people all the time.”

taming uncertainty

“If the only tool you have is a hammer, everything looks like a nail.”

I used to look only at averages to make “data-driven” decisions.

If the data is biased, data-driven decisions will also be biased.

I’m becoming more skeptical when evaluating statistics that push for a narrative.

Just because a decision was data-driven doesn’t mean it’s a good decision.

Every decision for a real-world problem is made in the face of uncertainty. There will always be some incomplete knowledge — some absence of evidence.

As a result, every decision is imperfect. There is no “perfect” decision. To make better decisions, just make decisions that are less wrong.

The question is not whether your decision is biased (spoiler: it is), but how much bias and how much incomplete knowledge you will tolerate in your decision-making.

In other words, how will you tame uncertainty in your decision-making?

How we frame risk shapes our decision-making.

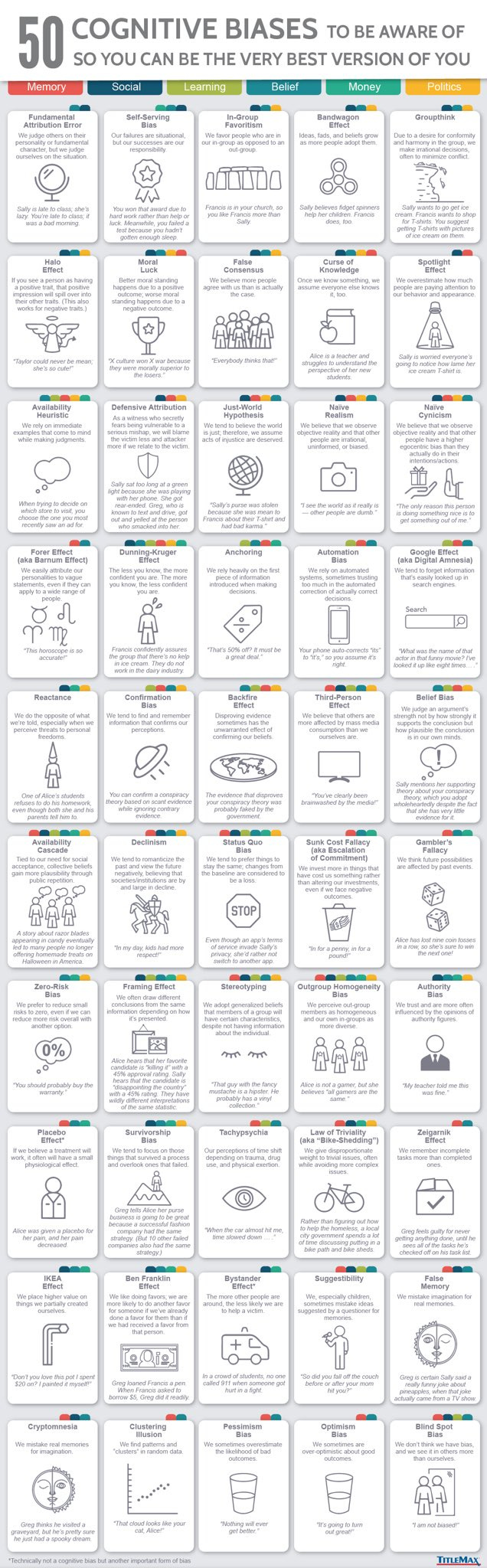

Developing self-awareness of our heuristics and fallacies goes a long way in spotting biases and errors before we make them. I’ve identified a few of many: narrative fallacy, survivorship bias, and neglect of variability. Here are some more.

I fell down bad for the attractive average and saw how easy it was for others to fall too.

I’ll end with an open-ended question:

What are some attractive averages people fall down bad for?

I’ll explore more on taming uncertainty in the next article, so follow me on Twitter for a sneak peek!